With self-driving cars, facial recognition on our smartphones, and machine learning inside transportation apps like Uber, it can seem as though we already live in a world run by robots. Still, many believe that a clear division remains between “human” and “non-human.” Sure, robots may be able to drive along a street or play a specific song when asked, but we humans claim the realm of emotion and empathy for ourselves. But recent robotic innovations suggest that such traits may not be so unique to humans after all.

Ph.D. student Boyuan Chen and professor of mechanical engineering and data science Hod Lipson at Columbia University are among the researchers seeking to demonstrate just that—starting by giving robots the ability to predict behavior based on visual processing alone.

“We’re trying to get robots to understand other robots, machines, and intelligent agents around them,” Lipson said. “If you want robots to integrate into society in any meaningful way, they need to have social intelligence—the ability to read other agents and understand what they are planning to do.”

Theory of mind, the ability to recognize that others have different mental states, goals, and plans than your own, is an integral part of early development in humans, appearing at around the age of three. Allowing us to understand the mental state of those around us, theory of mind acts as the basic foundation for more complex social interactions such as cooperation, empathy, and deception.

In children, it can be observed in successful participation in “false-belief” tasks, such as the famous Sally-Anne test, in which the participant is asked questions to see if they understand that two fictional characters, Sally and Anne, have different information and thus different beliefs. If a child is able to recognize that different people know different things, this is a strong indicator that they possess theory of mind. As children grow, they naturally build on this foundation to develop the social skills needed to navigate the world around them.

“We humans do this all the time in lots of subtle ways,” Lipson said. “As we communicate with each other, we read facial expressions to see what the other person is thinking.”

It is this very ability that Chen and Lipson hope to one day give to robots. To do so, however, they must first start with the basics of theory of mind. After all, what comes so easily to us as humans is not so easily produced in robots. In their recent research, they sought to find evidence that theory of mind is preceded by something called “visual behavior modeling.” In essence, they wanted to see if robots could understand and predict the behaviors of another agent purely from visual analysis of the situation.

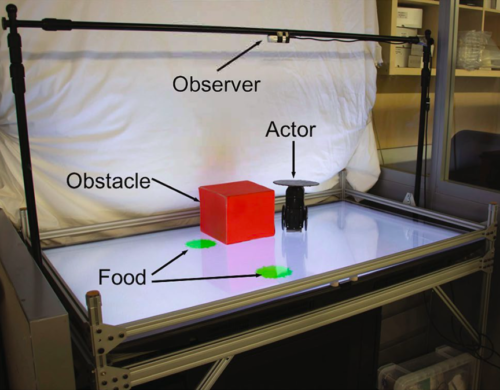

In their experiments, the researchers used a simple physical setup with two robots, an “actor” and an “observer.” The observer, via an overhead camera, had a complete view of the actor’s surroundings, including a green dot and sometimes a barrier. The actor robot would pursue the green dot only if the dot was visible from its point of view; if a barrier blocked its view of the dot, the actor would not move. Most importantly, the observer robot had no prior knowledge of the actor’s intention to pursue the green dot, nor of the coordinates of any object in the setup. The only information given to the observer was the raw camera view. Everything else had to be discovered and understood by the robot itself.
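To make the actor's rule concrete, here is a minimal Python sketch of "move toward the dot only if it is visible." It is an illustration under stated assumptions, not the researchers' implementation: the barrier is modeled as a single line segment, visibility is a plain line-of-sight test, and every name (`Point`, `segments_cross`, `actor_step`) is hypothetical. Note that the observer in the study was never given a rule like this or any coordinates; it had to infer the behavior from camera images alone.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Point:
    x: float
    y: float


def _orientation(a: Point, b: Point, c: Point) -> int:
    """Sign of the cross product (b - a) x (c - a): 1, -1, or 0 if collinear."""
    val = (b.x - a.x) * (c.y - a.y) - (b.y - a.y) * (c.x - a.x)
    return (val > 0) - (val < 0)


def segments_cross(p1: Point, p2: Point, q1: Point, q2: Point) -> bool:
    """True if segment p1-p2 properly crosses segment q1-q2.

    Touching or collinear cases are treated as not crossing (a simplification).
    """
    return (
        _orientation(p1, p2, q1) * _orientation(p1, p2, q2) < 0
        and _orientation(q1, q2, p1) * _orientation(q1, q2, p2) < 0
    )


def actor_step(actor: Point, dot: Point, barrier=None, step: float = 1.0) -> Point:
    """Return the actor's next position (hypothetical policy).

    `barrier` is an optional (Point, Point) pair, an assumed stand-in for the
    physical barrier. If it cuts the line of sight to the dot, the actor stays.
    """
    if barrier is not None and segments_cross(actor, dot, barrier[0], barrier[1]):
        return actor  # dot hidden from the actor's point of view: do not move
    dx, dy = dot.x - actor.x, dot.y - actor.y
    dist = (dx * dx + dy * dy) ** 0.5
    if dist <= step:
        return dot  # close enough to reach the dot in this step
    return Point(actor.x + step * dx / dist, actor.y + step * dy / dist)


if __name__ == "__main__":
    actor, dot = Point(0.0, 0.0), Point(10.0, 0.0)
    wall = (Point(5.0, -1.0), Point(5.0, 1.0))
    print(actor_step(actor, dot))        # moves one step toward the dot
    print(actor_step(actor, dot, wall))  # stays put: the wall hides the dot
```

The design choice worth noticing is that the actor's behavior depends on what it can see, not on what an overhead camera can see, which is exactly the gap in perspective the observer has to bridge.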

Such an experiment differed from other research in the field because the observer robot used purely visual inputs and explicitly modeled the long-term expected behavior of the actor. Previous experiments gave the observer robot symbolic information, such as the coordinates of the actor robot and green dot, that would make the observer robot’s job much easier. Furthermore, Lipson and Chen carried out their experiments in the real world, rather than in simulations, which added new challenges but ultimately gave their findings more substance for application in existing technologies.

After being trained on 2,400 input-output image pairs of the actor robot’s actions in different scenarios, the observer robot was presented with a new scenario and asked to produce a single image showing the predicted long-term path of the actor robot. The researchers hypothesized that the observer robot could only be successful if it had the ability to visualize the point of view of the actor robot and understand from limited information that the actor robot was pursuing the green dot only when it was in its field of vision; in other words, if the observer had theory of mind.

To Chen and Lipson’s delight, the observer robot had a 98.5 percent success rate in predicting the path of the actor robot. This means that the observer robot was able to understand the actor robot’s point of view, learn its intentions, and predict its trajectory from visual analysis alone. In other words, the observer robot understood that the actor robot had different information and thus a different way of thinking and behaving. Interestingly, such results hint at how our ancestors may have evolved theory of mind, and suggest that they too may once have used a purely visual system to predict the behaviors of other beings.

“This was a very remarkable finding,” Lipson said. “This is the first step towards giving machines the ability to model themselves, for them to have some type of self-awareness. We’re on a path towards more complex ideas, like feelings and emotions.”

Based on their findings, Chen and Lipson are optimistic about how robotic theory of mind can help create more reliable machines. For example, driverless cars will be much more effective if they can read the nonverbal cues of other cars and pedestrians in their surroundings. Chen also recalled a funny story from his stay at an intelligent hotel in China, in which a robot delivered the wrong food to his room and could not recognize its mistake. According to Chen, such errors could be avoided if robots were trained with some social awareness and the ability to understand what other agents (in this case, their customers) are thinking.

Ultimately, the researchers hope to create a machine that can model itself, leading to introspection and self-reflection that can advance the social integration of robots into human society. In the short term, however, they are working on making the experimental situations more complex for the observer robots. For example, what will happen if two robots are modeling each other at the same time? If there is some type of challenge introduced, will they take part in manipulation and deception?

Of course, both Chen and Lipson understand that giving such capabilities to robots is a double-edged sword. Both agree that discussions about the ethics of AI are important across all fields, not just science. For example, issues of privacy and surveillance, the risk of manipulating or influencing human behavior, and the distribution of access to such powerful technologies will all be very important as we move forward with AI.

However, Chen remains optimistic about the future of robots and how they will contribute to our lives.

“I’m so excited about this area of research,” Chen said. “Even though people may be afraid that AI will become a threat to humans, I really view it as a tool and resource that will be used to improve our quality of life. Eventually, it will become just like electricity—so integrated into our lives that we can’t even feel it.”

Acknowledgements:

Chen, Boyuan, Carl Vondrick, and Hod Lipson. “Visual Behavior Modelling for Robotic Theory of Mind.” Scientific Reports 11, no. 1 (2021). doi:10.1038/s41598-020-77918-x.