Over the past year and a half, our hospitals, overwhelmed by COVID-19 patients desperate for oxygen, have been debilitated by staff and resource shortages. While many called for vaccines as a hopeful cure-all, some recognized a faster alternative: efficient and deliberate distribution of hospital resources. Fourth-year PhD candidate Amogh Hiremath and Professor of Biomedical Engineering Anant Madabhushi at Case Western Reserve University were among the bioengineers who confronted this problem. “It’s particularly heart-wrenching, as a father myself, to see pediatric wards filled up… kids [who] require critical surgeries just don’t have a bed,” Madabhushi said. Recognizing that delayed or inaccurate risk assessments could prove fatal, Hiremath and Madabhushi developed CIAIN (integrated clinical and AI imaging nomogram), the first deep-learning algorithm to predict the severity of COVID-19 patients’ prognoses based on patient CT lung scans as well as clinical factors.

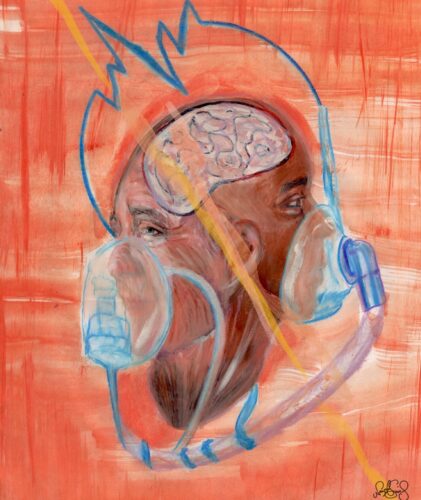

Artificial intelligence, at its core, endeavors to mimic processes within the human brain. Much as humans take lessons from past experiences and apply them to novel situations, computers “learn” patterns from a training set and are then evaluated on a separate testing set. In the case of a prediction algorithm like CIAIN, the computer is first fed existing patient data so that it can correlate features of CT scans and clinical test results with patient prognoses. Once the algorithm is trained, it can be applied to patient information it has not seen before (the testing group) and give prognoses with a high degree of precision. CIAIN is the “first prediction algorithm to use a deep learning approach in combination with clinical parameters,” Hiremath said. This combination makes it more accurate than algorithms using imaging alone. Another major advantage of CIAIN lies in its speed of deployability: because medical datasets are much harder to obtain than sets of natural images, Hiremath and Madabhushi trained, fine-tuned, and tested their model on roughly one thousand patient scans from hospitals in Cleveland, Ohio, and China. And notably, CIAIN is the first algorithm designed for COVID-19.
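The train-then-test idea can be sketched in a few lines of Python. This is only a toy illustration, not CIAIN itself: the patients are synthetic, the two features (a scan score and a lab value) are made up, and a simple nearest-centroid rule stands in for the deep-learning model.

```python
import random

def make_patient(rng, severe):
    # Hypothetical features: a lung-scan score and a blood-test value.
    center = (0.7, 0.6) if severe else (0.3, 0.4)
    features = [rng.gauss(center[0], 0.1), rng.gauss(center[1], 0.1)]
    return features, severe

def centroid(rows):
    # Average each feature across a list of feature vectors.
    return [sum(r[i] for r in rows) / len(rows) for i in range(len(rows[0]))]

def train(examples):
    # "Learning" here is just remembering the average severe and mild patient.
    severe = [x for x, y in examples if y]
    mild = [x for x, y in examples if not y]
    return {"severe": centroid(severe), "mild": centroid(mild)}

def predict(model, x):
    # Predict "severe" when x is closer to the severe average than the mild one.
    def dist2(a, b):
        return sum((p - q) ** 2 for p, q in zip(a, b))
    return dist2(x, model["severe"]) < dist2(x, model["mild"])

def accuracy(model, examples):
    return sum(predict(model, x) == y for x, y in examples) / len(examples)

rng = random.Random(0)
patients = [make_patient(rng, i % 2 == 0) for i in range(200)]
rng.shuffle(patients)

# The model only ever sees the first 150 patients; the last 50 are held out.
train_set, test_set = patients[:150], patients[150:]
model = train(train_set)
test_accuracy = accuracy(model, test_set)
print(f"accuracy on unseen patients: {test_accuracy:.2f}")
```

The key point is that accuracy is measured only on patients the model never saw during training, which is what makes the score a fair estimate of how it might behave on new cases.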

Given that their paper examined only unvaccinated patients, Madabhushi and Hiremath now want to investigate whether they can predict the risk of hospitalization for vaccinated individuals. “As we hear about new breakthrough infections, the question is if we need to run the analysis retrospectively on patients who have been vaccinated,” Madabhushi said. However, it is one thing to create predictive algorithms retrospectively and another to apply them to novel patient data without prior physician evaluation. A prospective study, one that follows patients before their ultimate outcomes are known, would employ a two-pronged approach. First, the researchers would evaluate the algorithm in the pilot phase of a prospective non-interventional trial, in which radiologists would upload a CT scan and the algorithm would generate a risk score for the patient. Then, after a few months, if the tool performed well, the study could transition into a prospective interventional form, and the researchers could submit the algorithm to the FDA for clinical approval.

Despite anticipating the use of CIAIN in the emergency room, Madabhushi was careful to emphasize the limited role that even very advanced algorithms can play in clinical settings. The vast majority of AI algorithms in the foreseeable future are intended to be decision support tools; they merely augment and complement the physician’s interpretation by aggregating data and prognosticating patient outcomes more accurately. Only physicians interact with patients, and they are thus the best individuals to make treatment decisions. Madabhushi likened the role bioengineers like himself and Hiremath play in healthcare to the role aircraft engineers play in improving functionalities on the console of an airplane. Ultimately, the physicians are the pilots in the cockpit.

No discussion of novel AI technology is complete without considering possible biases in the model and the effects of such biases. Imagine an algorithm trying to classify whether or not an object is ice cream. If, in training the algorithm, one feeds it only images of vanilla ice cream in a cone, the algorithm is likely to reject images of any other flavor, since it has never seen anything but vanilla ice cream cones labeled as ice cream. Simply put, algorithms are biased if the correlations they have learned from a certain training set (vanilla ice cream cones) can’t be extrapolated to the testing set (ice cream of all types).
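The ice cream thought experiment can be made concrete with a deliberately naive classifier. The sketch below is hypothetical in every detail (the feature names, the items, and the learning rule are invented for illustration): the "model" simply remembers which feature values it has seen on ice cream and rejects anything unfamiliar.

```python
def train(examples):
    """Record, for each feature, the values seen on items labeled ice cream."""
    allowed = {}
    for features, is_ice_cream in examples:
        if is_ice_cream:
            for name, value in features.items():
                allowed.setdefault(name, set()).add(value)
    return allowed

def predict(allowed, features):
    # Call something ice cream only if every feature value looks familiar.
    return all(value in allowed.get(name, set())
               for name, value in features.items())

def item(flavor, container, frozen=True):
    return {"flavor": flavor, "container": container, "frozen": frozen}

def accuracy(model, items):
    return sum(predict(model, f) == label for f, label in items) / len(items)

# Training set 1: only vanilla cones (plus one non-ice-cream item, which this
# simple learner ignores because it only studies positive examples).
narrow_training = [
    (item("vanilla", "cone"), True),
    (item("tomato", "bowl", frozen=False), False),
]
# Training set 2: the same data plus a wider variety of ice cream.
broad_training = narrow_training + [
    (item("chocolate", "cup"), True),
    (item("strawberry", "cone"), True),
]

test_items = [
    (item("vanilla", "cone"), True),
    (item("chocolate", "cone"), True),
    (item("strawberry", "cup"), True),
    (item("tomato", "bowl", frozen=False), False),
]

narrow_accuracy = accuracy(train(narrow_training), test_items)
broad_accuracy = accuracy(train(broad_training), test_items)
print(narrow_accuracy, broad_accuracy)
```

The learning rule is identical in both runs; only the variety of the training data changes. The narrowly trained model rejects the chocolate cone and the strawberry cup, while the broadly trained one accepts them.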

While this example may be innocuous, biases in models used in healthcare can have life-or-death consequences. This year, the American Society of Nephrology finally updated its model for calculating glomerular filtration rate, which was originally based on assumptions derived from Caucasian patients. The old model was found to make inaccurate calculations for African Americans, culminating in frequent misdiagnoses of chronic kidney disease.

Even if AI just provides a single data point for physicians to use in decision-making, AI predictions are often given precedence over other data points due to the complex methodology by which models aggregate information. Hence, ensuring that AI predictions are as accurate and unbiased as possible is crucial.

Even without prompting, Madabhushi and Hiremath highlighted the methods by which they attempted to avoid introducing biases to CIAIN. “We were very deliberate and purposeful in making sure the data was collected from a few different sites,” Madabhushi said. Diversifying the source of data made the algorithm more generalizable and also reduced the likelihood of a “leakage problem,” a known biasing factor AI models face when data is poorly separated between the training set and testing set. The resulting overlap means the algorithm will learn the training set well and appear to classify the overlapping testing set accurately, but will demonstrate poor accuracy in classifying a new “validation” testing set because it hasn’t learned enough variation. Both Hiremath and Madabhushi expressed the need for further validation to verify that CIAIN is sufficiently generalizable.
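One common way leakage creeps in is when several scans from the same patient end up on both sides of the split. The sketch below uses invented patient and scan IDs and is not drawn from the CIAIN study. It compares a naive split by scan with a split by patient and counts how many patients appear in both sets.

```python
import random

# Hypothetical dataset: 20 patients with 3 scans each.
scans = [(f"patient_{p}", f"scan_{p}_{s}") for p in range(20) for s in range(3)]

def split_by_scan(scans, test_fraction, rng):
    # Naive approach: shuffle individual scans, ignoring who they belong to.
    shuffled = scans[:]
    rng.shuffle(shuffled)
    cut = int(len(shuffled) * (1 - test_fraction))
    return shuffled[:cut], shuffled[cut:]

def split_by_patient(scans, test_fraction, rng):
    # Safer approach: assign whole patients to one side or the other.
    patients = sorted({patient for patient, _ in scans})
    rng.shuffle(patients)
    cut = int(len(patients) * (1 - test_fraction))
    train_ids = set(patients[:cut])
    train = [s for s in scans if s[0] in train_ids]
    test = [s for s in scans if s[0] not in train_ids]
    return train, test

def shared_patients(train, test):
    # Patients who appear in both sets are a source of leakage.
    return {p for p, _ in train} & {p for p, _ in test}

rng = random.Random(42)
leaky_train, leaky_test = split_by_scan(scans, 0.25, rng)
clean_train, clean_test = split_by_patient(scans, 0.25, rng)

leaky_overlap = shared_patients(leaky_train, leaky_test)
clean_overlap = shared_patients(clean_train, clean_test)
print(f"patients in both sets, split by scan:    {len(leaky_overlap)}")
print(f"patients in both sets, split by patient: {len(clean_overlap)}")
```

The same grouping idea extends to hospitals: holding out an entire site for testing is one way to check whether a model has learned the disease rather than the quirks of a particular scanner or patient population.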

While generalizing models might help decrease bias, it is not a fix-all. With African American patients three times more likely to die from COVID-19 than Caucasian patients, an algorithm trained on a mixed-race group may fail to accurately predict prognoses for either group. Scientists must account for how social determinants of health, including ethnicity, race, and socioeconomic status, play a role in disease manifestation and prognosis. “While we haven’t explicitly explored these factors with our methodology and platform yet, it is definitely something we want to look at,” said Madabhushi, who strongly believes that scientists need to get away from the idea that “one model fits all.” In fact, Madabhushi and Hiremath have compared the accuracy of models specific to different ethnic groups for breast, uterine, and prostate cancer; in each case, the model designed for the subpopulation yielded more accurate predictions than a more general model. Madabhushi expressed hope that “[scientists] will get to the point where there is a buffet of models and a physician can selectively invoke a model based on the ethnicity or other attributes of their patient. Otherwise, we are doing a disservice to underrepresented populations.”
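Why might a single pooled model underperform? A toy example helps, with the caveat that everything in it is invented: two abstract groups, A and B, share one hypothetical marker, but the marker level at which disease turns severe differs between them. The "model" is just a cutoff chosen to maximize accuracy, and for simplicity it is scored on the same data it was fitted to.

```python
def make_group(cutoff):
    # Marker values 0..19; a patient is severe once the marker reaches the cutoff.
    return [(marker, marker >= cutoff) for marker in range(20)]

def accuracy(threshold, examples):
    # Fraction of patients for whom "marker >= threshold" matches the true label.
    return sum((m >= threshold) == severe for m, severe in examples) / len(examples)

def fit_threshold(examples):
    # Try every candidate cutoff and keep the most accurate one.
    return max(range(21), key=lambda t: accuracy(t, examples))

groups = {"A": make_group(5), "B": make_group(15)}
pooled = groups["A"] + groups["B"]

# One cutoff for everyone versus one cutoff per group.
pooled_threshold = fit_threshold(pooled)
pooled_overall = accuracy(pooled_threshold, pooled)
pooled_accuracy = {name: accuracy(pooled_threshold, g) for name, g in groups.items()}
specific_accuracy = {name: accuracy(fit_threshold(g), g) for name, g in groups.items()}

print("pooled model, overall:   ", pooled_overall)
print("pooled model, per group: ", pooled_accuracy)
print("group-specific models:   ", specific_accuracy)
```

No single cutoff can serve both groups here, so the pooled model misclassifies a quarter of patients overall, while each group-specific cutoff fits its own group perfectly. Real populations are far messier than this, but the sketch captures the intuition behind the "buffet of models."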

In theory, the future of AI in healthcare seems clear: scientists must identify differences among populations and incorporate them into increasingly specific algorithms to minimize bias. But putting this into practice remains challenging: one of the biggest hindrances scientists face is a lack of data from underrepresented populations. Until this data becomes readily available via drastic institutional and structural change, it is up to scientists like Hiremath “to improve the current prediction models in a step-by-step manner, improve the biases that are involved, and create a usable product.”

As AI becomes increasingly ubiquitous in healthcare, there are fears that biased and over-generalized algorithms are being put into practice faster than refined and population-specialized algorithms are being created. We must remember that the personalized aspect of medicine (the conversations, interactions, and human observations) is just as important as an algorithm’s score, if not more so. AI can be a fantastic passenger-seat navigator to a physician driver. But society must be careful not to let AI take the wheel, lest the tool meant to improve patients’ survival endanger it instead.